PlayerZero achieves 18x faster code reasoning on turbopuffer

PlayerZero switched from pgvector to turbopuffer, reducing LLM code retrieval and reasoning from 3+ minutes to 10 seconds, improving search recall, and paving the way for a more ambitious product roadmap.

18x

faster retrieval and reasoning

800M+

documents

25k+

namespaces

We went from generating insights in 3 minutes to less than 10 seconds, with much better recall.

Maria Vinokurskaya, Founding Engineer

Why turbopuffer?

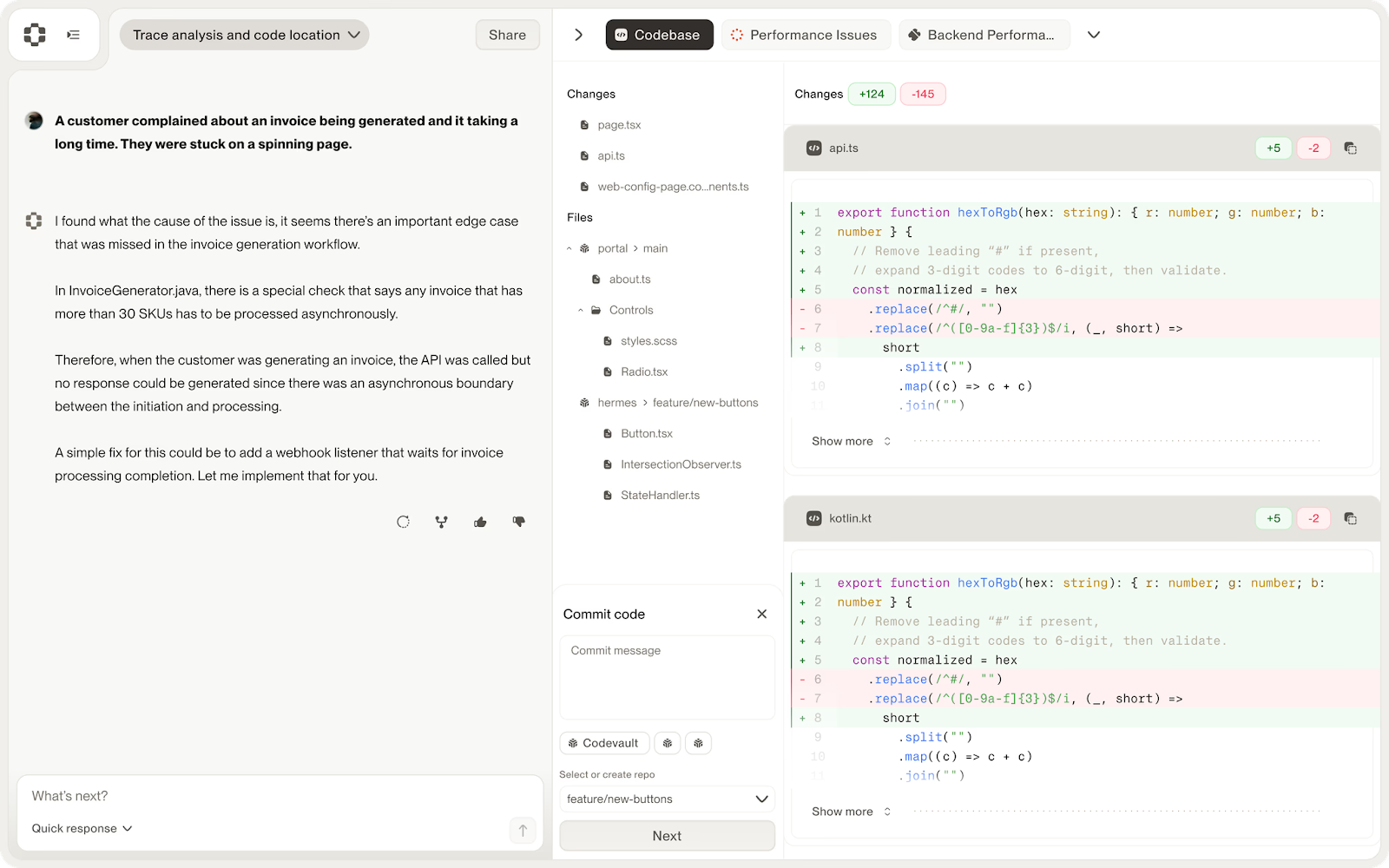

PlayerZero predicts, diagnoses, and fixes software issues in large codebases, automatically. The platform builds a complete understanding of a customer's system - code, telemetry, tickets, user sessions - then applies AI to investigate and solve problems.

Initially PlayerZero used Postgres with pgvector to store and retrieve codebase embeddings, but retrieval became unreliable with scale. Even after sharding their main table and applying index hinting, retrieval queries took 30s or more, recall was poor, and a single language model interaction could take more than 3 minutes to arrive at a conclusion.

turbopuffer gave PlayerZero serverless vector search that was both faster than pgvector and cheaper than peers, backed by responsive engineering support.

turbopuffer design support → 75% lower write costs

PlayerZero maps namespaces to codebases, and each branch in the repo has its own turbopuffer namespace. They initially maintained a table that mapped embeddings to the branches to which they belonged. When a new branch was created and they needed to index it, they’d have to upsert all rows touched by the branch. Any time a branch was created or deleted, they’d then have to do nearly as many updates as there were embeddings.

Rather than individually upsert rows on new branches, the turbopuffer team suggested an alternative approach: achieve namespace-per-branch with copy_from_namespace for data sharing between branches.

Because branches share most code, copying a namespace server-side and modifying only what’s needed to resolve the diff was both faster and cheaper than re-upserting documents for branches. PlayerZero reduced write costs for branch operations by 75% and with simplified write patterns with this approach.

Results

- 18x faster LLM codebase retrieval and reasoning than with pgvector

- 94% lower initial cost when benchmarked against a peer

- 800M+ documents, 25K+ namespaces, 4 TB storage → still sub-second retrieval

turbopuffer makes us more ambitious with our product roadmap. We can move faster, iterate, and test different ideas.

Maria Vinokurskaya, Founding Engineer

turbopuffer in PlayerZero

PlayerZero uses turbopuffer to semantically search user codebases, offering a debugging pane where LLMs reason over the code in response to a user debug query. Because turbopuffer provides significantly faster retrieval times with better recall, PlayerZero has reduced time-to-insight by 18x.

Up next for PlayerZero

Hybrid search is next on the roadmap for PlayerZero, and one of the reasons they chose turbopuffer. While they’re still relying primarily on vector search for code retrieval, they intend to start testing hybrid vector + full-text search powered by turbopuffer with rank fusion to see if it can improve recall for new use cases. As their usage evolves, we will update this customer log.